Computing in the Arts (CITA) students create capstone projects that synthesize what they have learned about Computer Science and their chosen arts concentration area.

This page features highlights of student capstones over the years that I have taught the capstones. I have excerpted text from the students’ final reports to briefly summarize their works. Students who are in the Honors College augment their capstone project with a thesis research experiment and essay.

Several projects feature applications of Data Science.

Sabrina Warner – Coastal Winds (Spring 2019)

Coastal Winds generates original abstract art using wind data collected from buoys across the country. The information presented can be helpful for surfers, since every surf break needs certain wind conditions to have good surf. The project was implemented using Processing and applied Perlin noise to ocean buoy data.

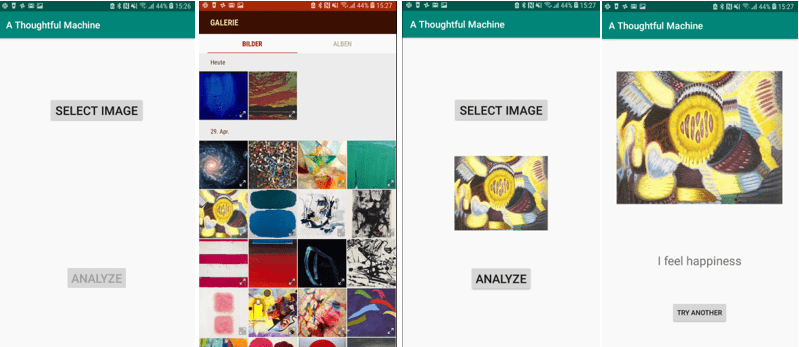

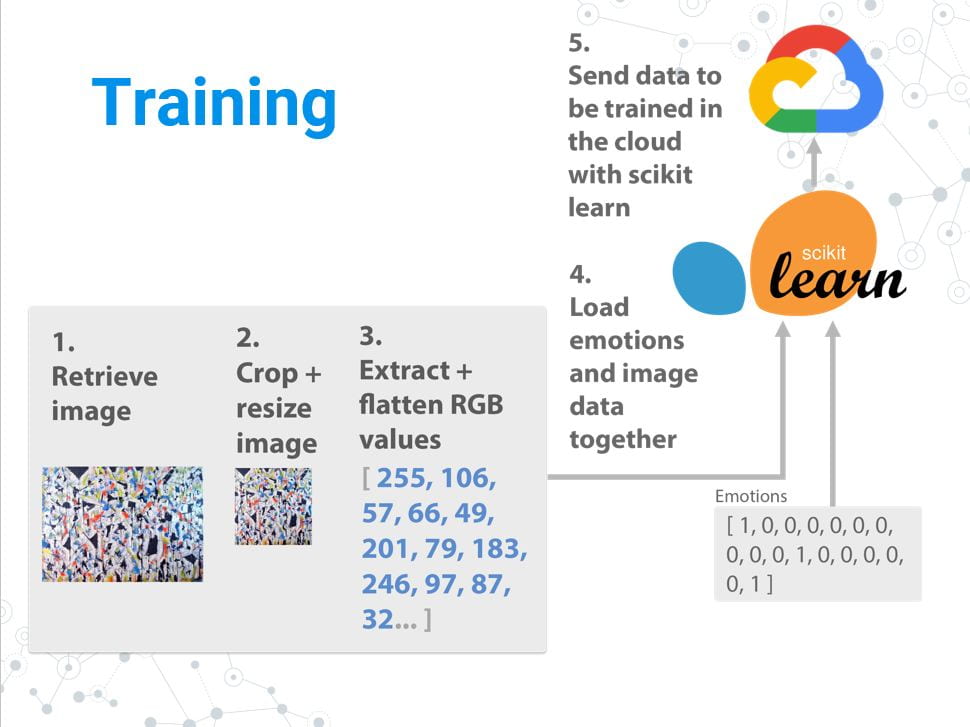

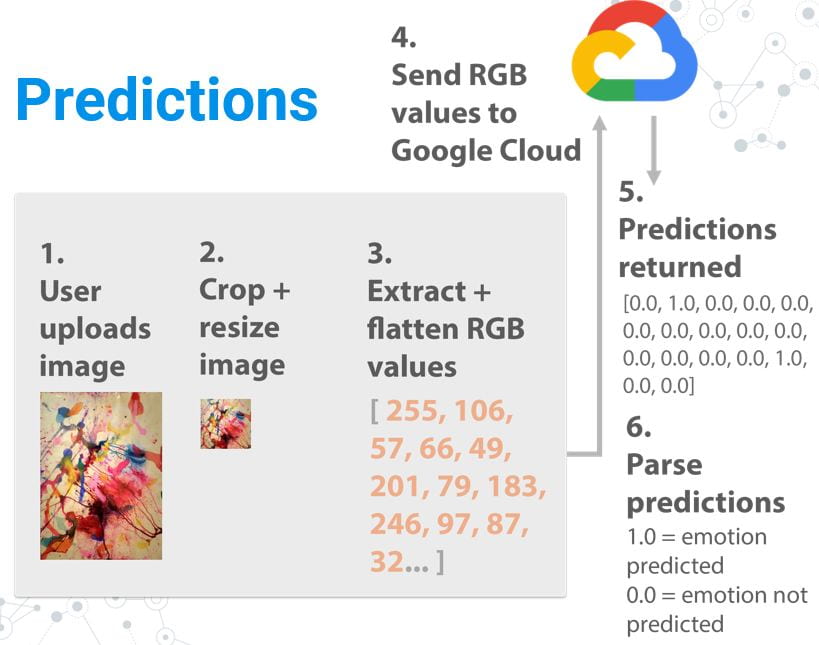

Sarah Miles – Thoughtful Machine (Spring 2019)

Thoughtful Machine explores the relationship between art, emotions, and computers through machine learning. The project trains a machine to find connections between images and emotions so that it can predict emotions for images it has never seen.

Mariam Bello-Ogunu – Exploratory visualization of cinematic editing patterns (Spring 2019)

Thinking Like a Director is a web app that allows a user to explore how various cinematic editing patterns are used in movies. A cinematic editing pattern is a sequence of types of shots designed to convey a desired narrative effect such as showing opposing viewpoints or intensifying action. This project visualizes the patterns dataset produced by INRIA researchers (Hui-Yin Wu and Marc Christie – Analysing cinematography with embedded constrained patterns 2016). The project is coded in JavaScript using the Highcharts data visualization library.

Mandy Smoak – Study of the Rule of Thirds and its use in Cinematography (Honors Spring 2016)

Aesthetic images evoke an emotional response that transcends mere visual appreciation. In this work we develop a computational means for evaluating the composition aesthetics of a given image based on measuring one of the most well-grounded composition guidelines—the rule of thirds [Figure 1]. We propose analyzing samples of frames from works by Hitchcock and Wes Anderson, with the goal to objectively and computationally prove the subjective observation that Hitchcock tends to follow the rule of thirds, while Anderson breaks it. We will first use a face detection API based upon OpenCV and Python. We will then create a program to iterate through many frames within a single film—specifically Alfred Hitchcock’s Psycho—that will locate the actor’s face, store the center focal point of the face, and compute the distance from both the vertical third lines and the horizontal third lines. These calculations will then be used to compare how closely Anderson and Hitchcock’s compositions follow the rule of thirds.
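The distance calculation described above can be sketched in a few lines of Python. This is only an illustration, not the project's actual code: the function name `thirds_distance`, the `(x, y, w, h)` bounding-box layout, and the choice to measure to the nearest third line are all assumptions. The face box would come from whatever face detector is used.

```python
from typing import NamedTuple


class ThirdsDistance(NamedTuple):
    dx: float  # pixels from face center to the nearest vertical third line
    dy: float  # pixels from face center to the nearest horizontal third line


def thirds_distance(face_box, frame_width, frame_height):
    """Measure how far a detected face sits from the rule-of-thirds lines.

    face_box is assumed to be (x, y, w, h), with (x, y) the top-left corner.
    """
    x, y, w, h = face_box
    # Center focal point of the face.
    cx, cy = x + w / 2, y + h / 2
    # The two vertical and two horizontal lines that split the frame in thirds.
    vertical_lines = (frame_width / 3, 2 * frame_width / 3)
    horizontal_lines = (frame_height / 3, 2 * frame_height / 3)
    dx = min(abs(cx - line) for line in vertical_lines)
    dy = min(abs(cy - line) for line in horizontal_lines)
    return ThirdsDistance(dx, dy)
```

Averaging these distances over many sampled frames would give one number per film that can be compared between directors. Dividing by the frame size first would make films of different resolutions comparable.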

Mitchell Boyer – Explorable Portfolio of Autonomous 3D-Sculpted Creatures (Spring 2018)

I wanted to create a platform to proudly display some of my works in a unique setting. With this in mind I decided to create my own game environment in which players are encouraged to explore and discover my 3D creature artwork. The player is able to access the creature gallery through the pause menu and navigate around the static models, enabling them to appreciate the game objects not only as NPC creatures, but also as individual works of art. I created original C# scripts for each variety of NPC that existed within my game in order to give my creatures believable interactions amongst themselves and with the player. For example, some predatory creatures will attempt to hunt down smaller prey items, which will in turn try to escape their pursuers.

Miah Bundy – VR Simulation to Promote Understanding of Mental Illness (Spring 2018)

My original expectations for this project were as follows: demonstrate how difficult doing theoretically simple tasks can be while living with mental illness, visually portray how depression and anxiety affect one’s perceptions and thoughts, and exemplify that stigmas against mental illness are dangerous. The program will keep track of three different attributes of the character: energy, depression, and anxiety. The image effects will be correlated to actual health phenomena related to these qualities. Energy will be seen as the amount of focus the character has; if their energy is diminished, a blur effect is seen. Loss of energy is correlated to an inability to concentrate, so this effect seemed to be the best fit. As the character’s depression fluctuates, so does the color saturation. People with depression do not actually see colors change; rather, this is meant to represent the dark, looming mood one experiences. People who suffer from anxiety can experience body tremors, and thus I chose to use a camera shake effect for increased anxiety. In this way, this project is a mixture of a realistic simulation of everyday activities and a symbolic visualization of mental illness.

Miah used Unity Game Engine to write custom image shaders to create visuals in the style of Vincent Van Gogh.

Julie Chea – Step In Immersive Puzzle Game (Spring 2018)

Step In is an immersive and interactive puzzle game that creates an environment where the player is placed within a realm of information, encouraging enlightenment and empathy. In today’s society, technology has desensitized people to many issues in the world, and I want to explore ways that technology can bring that human connection back. The player manipulates their own body to fit into the context of the presented image in order to proceed to the next level. To foster understanding, I sought to use minimal hardware so that players focus on the content rather than on figuring out how to work the hardware. The space is created by a projector and a Microsoft Kinect One. Using Processing software, technology is blended with art: my personal art is incorporated as each of the puzzle’s settings, and code is used to produce an environment that encourages higher thinking on sensitive subjects. It is designed to intermix reality with artwork to produce a unique experience for each individual user.

The second image depicts “Dragon Dance.” This frame allows the player to become one with a cultural celebration, intertwining themselves within a brightly colored backdrop.

The last image is influenced by Dr. Martin Luther King’s March for Voting Rights in Selma, AL. This image allows the player to link arms with two other people to represent unity towards justice.

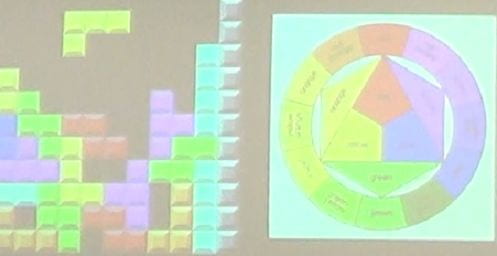

Jo Culbertson – Tetricolor: A Color Theory Game in the Style of Tetris (Spring 2018)

Tetricolor appears to be a typical Tetris-style game, but instead of matching shapes to make a line, the user matches randomly generated colors to create a match that follows one of the color schemes in color theory. The proper match will depend on which level the player has chosen. The player will begin the game by going to the webpage (which will include an information screen) and hitting the start button when they are ready to begin. The player will then use the ‘a’ and ‘d’ keys to move the shape left and right as it slowly falls. When the user has created an accepted match, the shapes will disappear, and a predetermined number of points will be added to the user’s score.
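As a rough illustration of what checking a color-scheme match could involve, here is a minimal Python sketch that tests whether two colors are complementary, meaning their hues sit roughly opposite each other on the color wheel. The game itself was written in JavaScript and its actual matching rules are not described here, so the function names, the choice of the complementary scheme, and the 15-degree tolerance are all assumptions.

```python
import colorsys


def hue_degrees(rgb):
    """Return the hue of an (r, g, b) color with 0-255 channels, in degrees."""
    r, g, b = (channel / 255 for channel in rgb)
    hue, _saturation, _value = colorsys.rgb_to_hsv(r, g, b)
    return hue * 360


def hue_gap(rgb_a, rgb_b):
    """Smallest angle between two hues on the color wheel (0 to 180 degrees)."""
    diff = abs(hue_degrees(rgb_a) - hue_degrees(rgb_b)) % 360
    return min(diff, 360 - diff)


def is_complementary(rgb_a, rgb_b, tolerance=15):
    """True when the hues are about 180 degrees apart (assumed tolerance)."""
    return abs(hue_gap(rgb_a, rgb_b) - 180) <= tolerance
```

For example, `is_complementary((255, 0, 0), (0, 255, 255))` pairs red with cyan and returns `True`. Other schemes, such as analogous or triadic, could be checked the same way by comparing `hue_gap` against a different target angle.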

Jo used JavaScript and Web3D graphics to code this educational game.

Savannah Floyd – Abstract Art from Mobile Phone Walks (Spring 2018)

This application combines performance art with visual art. The user walking around while the application collects their location data is the performance art aspect, while the image generated at the end of the location collection is the visual art aspect, creating a hybridization of the two. The art of Sol LeWitt inspired my design. Many of his wall drawings are composed of lines and colors, arranged in various ways that make them stand out from one another. One piece in particular, his Wall Drawing 692 (below), was a great source of inspiration for what I originally envisioned the user’s generated artwork to look like once they have ended the navigation tracking and the image is created.

Savannah’s project was developed using Android Studio and the Google Maps software toolkit to access map coordinates of the users’ walking paths.

Maddie Maniaci – Abstract Visualization of Motion-Captured Dance (Spring 2018)

My capstone project explores motion capture by fusing dance and technology to create a movement-based abstract piece of art. My choreography was heavily inspired by water, as my movement vocabulary is fluid. For the project, I used the Unity game engine alongside the OptiTrack motion capture system. The artwork itself is abstract and inspired by the movement of water. In the beginning, when thinking about how I wanted my artwork to appear to an audience, I was heavily inspired by the Asphyxia project. I loved how the dancer looked like they were made up of particles/atoms sporadically moving throughout the dancer’s body.

Curtis Motes – BoomerAangVR (Spring 2018)

BoomerAangVR is an exciting game that synthesizes arts and computing by creating a virtual artistic space that is fun to explore and contains the elements of a traditional platformer game. The game invites the user to observe my art while exploring the mechanics of teleporting in VR. The levels and painted backgrounds are designed to represent the four seasons of Earth in the impressionist style of painting. This design choice was influenced by my art mentor Francis Sills, whose themes of the low country and subtle seasonal change I sought to emulate. In BoomerAangVR, the user’s body is tracked and moved in virtual space within the confines of the room by the HTC Vive Cameras. The user can look around the game with the headset on by turning their head around. Using the Vive controllers, the player can grab and throw a boomerang disc object. After throwing the boomerang, the player can teleport to its location at any point in its flight. The player can also use the trigger on the left controller to return the boomerang near the player. The objective of the game is to navigate throughout four distinct levels using this teleport mechanic, avoiding falling off the platforms in each level. Each of the four levels is themed to represent one of the four seasons: Summer, Winter, Spring, and Fall.

Johnny Bello-Ogunu – European Bubble Art (Spring 2018)

European Bubble Art is a virtual reality experience where a person can get a brief overview of various artistic and musical pieces. The user explores various works of art by selecting and “popping” bubbles. Bubbles appear around the user, and the user pops a bubble by clicking a button on the HTC Vive. After the bubble pops, a 3D model of a European artwork or piece of architecture of my choice will appear in front of the user. The user can then tap on the model, and a brief fact about that model will appear. While the user is looking at the work of art, music representing the time period in which the art was created will play in the background. Before popping a bubble, the user can interact with it by holding it on their hand for a bit or by putting two bubbles together. I am integrating art with technology by putting the user in a virtual reality space while immersing them in an interactive world of various artistic works and music.

Rachel Steele – Text Sentiment Analysis to Image Filter Effect

My project manipulates an image to represent emotional values present in accompanying text. It combines the use of technology and artistic strategies to create an appealing end result. The technology comes in with the analysis of lexical data to calculate dominant emotional tones in text. The art comes in by applying mutations to the image based on algorithms I have created. The text processing was done in Java to analyze the dominant emotion in an input passage of text. Java writes the data results to a file, which Processing then reads and uses to manipulate the provided image. A pre-determined image processing effect is then applied depending on what was found to be the dominant emotion in the text. For happiness, a posterize filter is applied; for anger, randomly selected pixels are displaced by a random vector; and for fear, the photo is blended with itself to create a distorted sort of “aftershock” effect.
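The text-to-effect pipeline can be illustrated with a small Python sketch. It is not the project's Java or Processing code: the toy word list in `LEXICON`, the function names, and the simple word-counting approach are assumptions made for illustration. Only the three emotion-to-effect pairings come from the description above.

```python
import re
from collections import Counter

# Hypothetical toy lexicon; the project's actual lexical data is not described.
LEXICON = {
    "joy": "happiness", "delighted": "happiness", "smile": "happiness",
    "furious": "anger", "rage": "anger", "hate": "anger",
    "afraid": "fear", "terrified": "fear", "dread": "fear",
}

# Emotion-to-effect pairings named in the project description.
EFFECTS = {
    "happiness": "posterize",
    "anger": "random pixel displacement",
    "fear": "self-blend aftershock",
}


def dominant_emotion(text):
    """Return the emotion with the most lexicon hits, or None if there are none."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(LEXICON[word] for word in words if word in LEXICON)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def effect_for(text):
    """Pick the image effect that corresponds to the text's dominant emotion."""
    return EFFECTS.get(dominant_emotion(text))
```

In the project, the result of the analysis step is written to a file that the image-processing step reads. Here the two steps are simply chained through a function call.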

Jesse Taylor – Immersive VR Japanese Language (Spring 2018)

The goal of my project was to create an immersive language learning game that uses a system like spaced repetition to help the player remember vocabulary. Spaced repetition works by calculating the optimal time to remind the user of the information they have just covered. It reminds the user of the information at roughly the time they might forget it, putting it fresh back into memory.
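To make the idea concrete, here is a minimal Python sketch of one very simple spaced-repetition rule: the review interval doubles each time a word is remembered and resets when it is forgotten. The game's actual scheduling is not described, so the `Card` class, the doubling rule, and the day-based timing are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Card:
    word: str
    interval_days: int = 1  # current gap between reviews
    due_day: int = 0        # day number on which the word should be shown again


def review(card, today, remembered):
    """Update a card after the player has been shown its word."""
    if remembered:
        # Recalled correctly: wait twice as long before the next reminder.
        card.interval_days *= 2
    else:
        # Forgotten: start over with a short interval.
        card.interval_days = 1
    card.due_day = today + card.interval_days
    return card


def due_cards(cards, today):
    """Return the cards whose reminder time has arrived."""
    return [card for card in cards if card.due_day <= today]
```

With this rule, a word remembered on day 0 comes back on day 2, then on day 6 if remembered again, so well-known words are shown less and less often.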

The scenarios the player is put into are everyday scenes such as a living room and a bedroom. The rendering style takes its influence from cel-shaded works, which incorporate a lot of flat colors. The player can explore each scene, in which objects appear with Japanese text above them. The text uses the phonetic alphabet hiragana, so it is assumed that the player can at least read the phonetic alphabet. The Vive VR controllers allow the player to pick up, throw, stack, and otherwise handle the individual objects. The player can then interact with the door in each scene to travel to other scenes, each of which will spawn a new set of objects based on weights.

Ron Taylor – Music Composition Generated from VR Gameplay (Spring 2018)

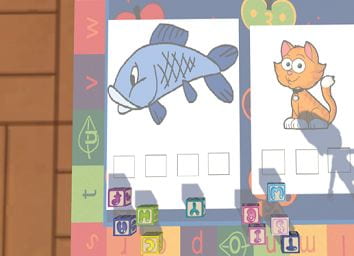

The user interacts with the project by playing the game Classroom Chaos; based on their actions within the environment, they are determined to be naughty or nice. Whether they are naughty or nice influences how the music is composed: if they were naughty, the music will be tense and dark rather than upbeat or peaceful. In this VR kindergarten classroom, I developed three mini-games that, upon completion, send an OSC message to Jython with a value representing how the player completed it, good or bad. One of the three mini-games is Spelling Blocks, where the user is tasked with spelling the word for the image on the rug using the empty squares on the rug. The rug has two images, a fish and a cat, seen below.

I used Jython music to set up all the pre-composed material, which consists of five measures introducing the key, two measures for ending the piece, and twelve measures in between that are selected randomly according to the user’s morality value for each mini-game. I made about thirty-two measures for this random selection. Jython communicates with Unity through Open Sound Control to receive the game-state variable that determines whether the player is naughty or nice.

Noah Albertsen (Fall 2018, Honors College)

This project algorithmically generates imagery that is then translated into traditional relief prints. This algorithm was created within the open-source Processing.py development environment. It was used to generate multi-layer images, with each layer being its own unique color. The user is able to change parameters such as the color palette used, the general style, and the number of layers. Once the layers have been generated, they are translated into image files. These image files will then be sent to a laser cutter, and they will be engraved onto wood blocks. This work is similar to that of Vera Molnár, a French media artist from Hungary, who learned the programming languages Fortran and Basic, and used a plotter to create computer graphic drawings.

Jessica Mack (Fall 2018)

Microcosm is a physics simulation that generates dynamic backdrops for performances. Users will project the simulation onto surfaces like the wall or floor to create an immersive environment for performing art, aimed at small groups or solo performers. The physics simulation will be created within the Unity Game Engine. The floor projection will be a pre-programmed animation of water caustics and ripples, while the wall projection will contain anti-gravity water particles moving through the air in the beach scene. The design of this project was inspired by Cirque du Soleil’s performance of Toruk and by Canion Shijirbat from Mongolia’s Got Talent, who created a digitally animated backdrop that went along with choreography that he performed.

Alex Smith (Fall 2018)

Code Cavern is an educational game that aims to teach kids basic programming concepts through fun and engaging gameplay. The project design is similar to the existing educational programming products Kodable and Code & Go Robot Mouse. Users control an adventurer who progresses through dungeon levels by applying programming constructs to solve puzzles. The player drags and drops the arrow action buttons at the top of the screen into the empty slots on the right. These actions must be in the correct order to guide the character in the right direction.
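The core puzzle check can be sketched in Python as running an ordered list of arrow actions on a grid and seeing whether the character ends on the goal. This is an illustration only: the grid representation, the function names, and the rule that a blocked move leaves the character in place are assumptions, not details of the game.

```python
# Each arrow action moves the character one cell; y grows downward.
MOVES = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}


def run_actions(start, actions, walls, width, height):
    """Apply the actions in order and return the character's final cell."""
    x, y = start
    for action in actions:
        dx, dy = MOVES[action]
        nx, ny = x + dx, y + dy
        # Assumed rule: moves into a wall or off the grid are ignored.
        if 0 <= nx < width and 0 <= ny < height and (nx, ny) not in walls:
            x, y = nx, ny
    return (x, y)


def solves_level(start, goal, actions, walls, width, height):
    """True when the ordered actions guide the character to the goal."""
    return run_actions(start, actions, walls, width, height) == goal
```

On a 3-by-2 grid with a wall at `(1, 0)`, the sequence `["down", "right", "right"]` reaches a goal at `(2, 1)`. The same three actions in the order `["right", "right", "down"]` do not, which is the ordering lesson the puzzles are built around.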